Failure criteria

The Failure Criteria feature allows you to set the pass or fail criteria of your test for various metrics, such as response times, errors, hits per second, test duration, and so on.

- Enable and Define Failure Criteria

- Define Failure Criteria Using the Baseline

- Fail Tests against the Baseline when Running from Taurus

- Stop Tests when Running from Taurus

- Baseline-based Taurus failure criteria in BlazeMeter UI

Video demo

Learn how to set failure criteria within your test definition, enabling you to mark tests as pass or fail based on specified conditions.

Enable and define failure criteria

To enable failure criteria, follow these steps:

- In the Performance tab, click Create Test, Performance Test.

-

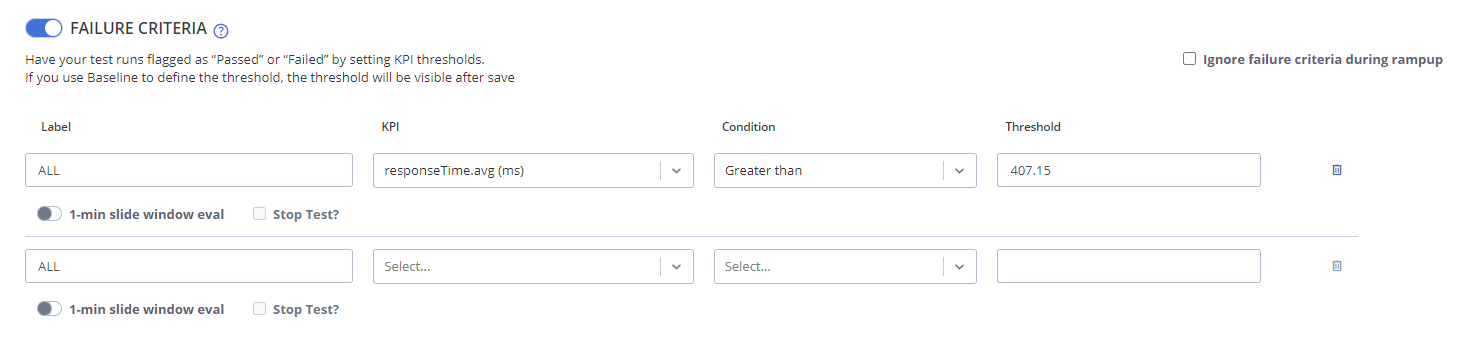

Scroll down to the Failure Criteria section and toggle the button ON.

The following parameters are available:

- Label: Apply the rule to a particular label from your script. It is set to ALL (all labels) by default.

- KPI: Select the metric you want to apply the rule to. Expand the drop-down menu to review the available metrics.

- Condition: The binary comparison operator for this rule. Expand the drop-down menu to see the available operators, which include Less than, Greater than, Equal to, and Not Equal to.

- Threshold: The numeric value that the KPI is compared against.

- Stop Test: When selected, the test stops immediately after the first violation is detected. Otherwise, the test continues running uninterrupted until it ends. You can select this option only together with the 1-min slide window eval option.

- Delete Failure Criteria: Click the trash bin icon to delete the criterion.

- 1-min slide window eval: Select this option to evaluate the failure criteria while the test is running, instead of only once at the end of the test. The criteria are evaluated every 10 seconds over a 60-second window, and the test fails if at least one violation is found.

- Ignore failure criteria during ramp-up (Advanced configuration drop-down): Select this checkbox to ignore any failures that occur during ramp-up, so that the criteria are evaluated only after ramp-up ends.
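The following Python sketch models how the 1-min slide window eval, Stop Test, and ramp-up options interact. It is a hypothetical illustration, not BlazeMeter's implementation: it assumes the KPI is averaged over the samples inside the window, that a criterion is violated when the comparison with the threshold is true, and that ignoring ramp-up means discarding samples collected before ramp-up ends. The names `Criterion` and `evaluate_sliding` are invented for this example.

```python
import operator
from dataclasses import dataclass
from statistics import mean

# Hypothetical mapping of the Condition drop-down to Python comparisons.
OPS = {
    "Less than": operator.lt,
    "Greater than": operator.gt,
    "Equal to": operator.eq,
    "Not Equal to": operator.ne,
}


@dataclass
class Criterion:
    condition: str        # one of the OPS keys
    threshold: float
    stop_test: bool = False


def evaluate_sliding(samples, criterion, ramp_up_s=0, window_s=60, step_s=10):
    """Evaluate one criterion while a test runs (illustrative model only).

    samples: list of (elapsed_seconds, value) pairs for one KPI, in whole seconds.
    Every step_s seconds, the values from the last window_s seconds are averaged
    and compared with the threshold. Returns (passed, stopped_at_seconds).
    """
    compare = OPS[criterion.condition]
    # Assumption: "ignore during ramp-up" drops samples taken before ramp-up ends.
    kept = [(t, v) for t, v in samples if t > ramp_up_s]
    if not kept:
        return True, None
    end = max(t for t, _ in kept)
    violated = False
    for tick in range(step_s, end + step_s, step_s):
        window = [v for t, v in kept if tick - window_s < t <= tick]
        if window and compare(mean(window), criterion.threshold):
            violated = True
            if criterion.stop_test:
                return False, tick  # stop at the first violation
    return not violated, None
```

For example, with a KPI of 10 for the first minute and 30 afterwards (one sample every 5 seconds), a "Greater than 20" criterion with Stop Test selected returns `(False, 100)`: the 60-second average first exceeds 20 at the 100-second tick. Without Stop Test, the same run returns `(False, None)` and keeps running to the end.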

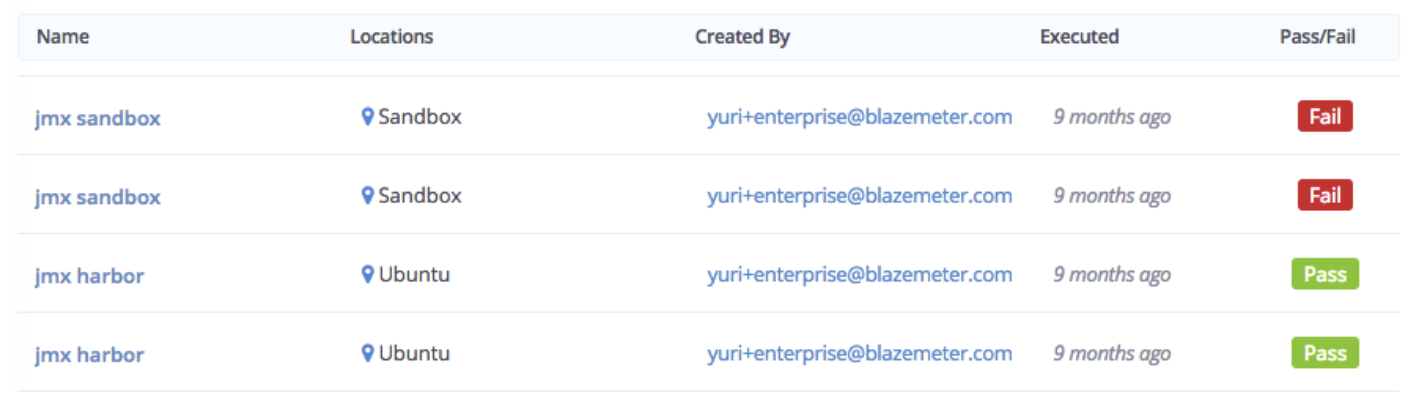

When failure criteria are defined, the pass/fail results appear on the workspace dashboard, which makes it easier to monitor your testing over time. To open the dashboard, select the workspace of interest from the Workspace drop-down menu.

For Taurus tests (tests that use a YAML configuration file), most Taurus pass/fail capabilities are translated into BlazeMeter failure criteria. As a result, when a YAML script is uploaded to BlazeMeter, the pass/fail module in the script automatically appears in the test UI. You can also execute a test from Taurus with cloud provisioning; BlazeMeter recognizes the pass/fail module and displays it in the report.

Define failure criteria using the baseline

Comparing a test against a baseline, and failing it when results deviate from that baseline (baseline-based failure criteria), gives you more accurate failures when you automate your tests.

When you set a report as a baseline, you can also set the failure criteria against the baseline. If performance degrades compared to the baseline in a subsequent test, the test will fail automatically.

Follow these steps:

- Go to the Performance tab, click Tests and select a test from the drop down list.

The test reports window opens. - Click the Configuration tab and scroll down to the Failure Criteria section.

- Toggle the button for Failure Criteria on.

-

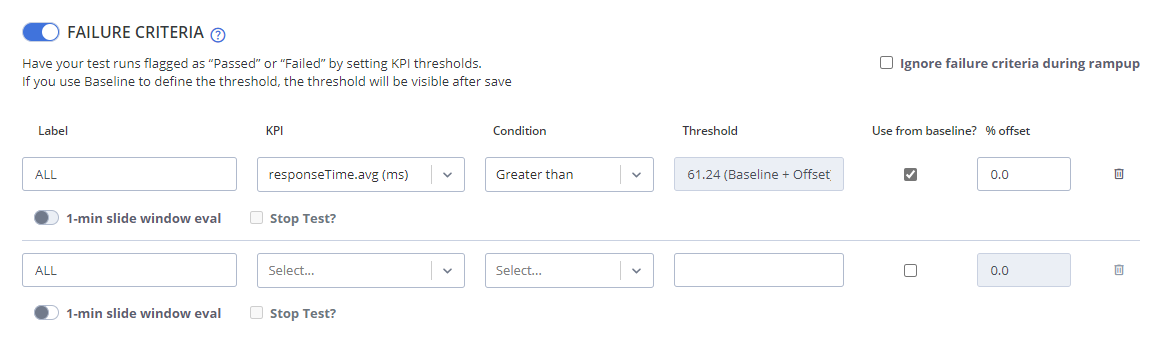

Check the box for Use from baseline. This checkbox is available only if there is a baseline selected for the test.

-

You can:

- Set the threshold for each failure criteria from the selected baseline.

- Define an offset from baseline you want to tolerate, so that minor deviation from baseline will not be defined as a failure.

If you use Baseline to define the threshold, the threshold will be visible after save.

Subsequent tests are compared against the baseline using these failure criteria. If performance degrades beyond the baseline plus the defined offset, the test fails automatically.

Fail tests against the baseline when running from Taurus

In this section, we will focus on defining baseline-based failure criteria in Taurus.

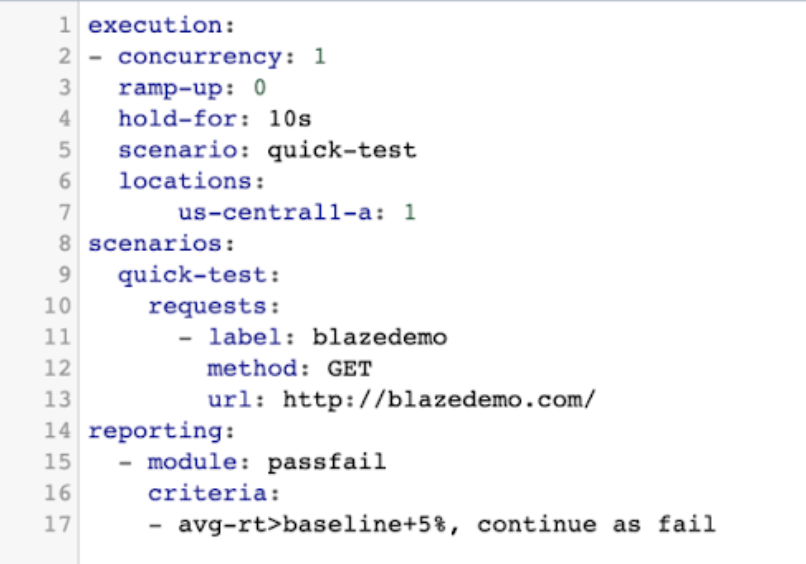

Failure criteria that use the baseline can be configured in the YAML file and "translated" into BlazeMeter failure criteria.

Failing tests from Taurus against the baseline is possible only if the test is already defined in BlazeMeter (not for new tests created via Taurus) and the test has a baseline defined in BlazeMeter (you can't set a baseline for a test while running it in Taurus).

You can define the failure criteria in the YAML file when:

- Running a YAML test in BlazeMeter.

- Running an existing Taurus test from the command line in the cloud (in BlazeMeter), not locally.

For each pass/fail criterion, you can define whether its threshold is taken from the baseline, and with which offset.

Example:

```yaml
reporting:
- module: passfail
  criteria:
  - avg-rt>baseline, stop as failed
  - avg-rt>baseline+5%, stop as failed
  - hits<baseline-5%, stop as failed
```

The above example uses the baseline as the threshold for each criterion.

The format is the following:

```
[KPI] [Condition] baseline [Offset]
```

For example: avg-rt>baseline+5%

Explanation:

- KPI: the KPI to compare.

- Condition: the comparison operator, one of >, <, >=, <=, =, or == (same as =).

- Baseline: the word "baseline" indicates that the value to compare with (the threshold) is taken from the baseline.

- Offset: the percentage of deviation from the baseline that is tolerated without failing the test.
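To make the format concrete, here is a Python sketch that splits a baseline-based criterion into its parts. The regular expression and the `BaselineCriterion` and `parse_baseline_criterion` names are assumptions for illustration; this is not the parser that Taurus or BlazeMeter actually uses.

```python
import re
from typing import NamedTuple, Optional

# Illustrative pattern for "[KPI] [Condition] baseline [Offset]", optionally
# followed by ", <action>". Longer operators are listed first so ">=" wins over ">".
PATTERN = re.compile(
    r"^\s*(?P<kpi>[\w.-]+)\s*(?P<cond>>=|<=|==|>|<|=)\s*baseline"
    r"(?:\s*(?P<offset>[+-]\d+(?:\.\d+)?)%)?"
    r"\s*(?:,\s*(?P<action>.+?))?\s*$"
)


class BaselineCriterion(NamedTuple):
    kpi: str
    condition: str
    offset_pct: Optional[float]  # signed, e.g. 5.0 or -5.0; None if no offset
    action: Optional[str]


def parse_baseline_criterion(text: str) -> BaselineCriterion:
    match = PATTERN.match(text)
    if match is None:
        raise ValueError(f"not a baseline-based criterion: {text!r}")
    offset = float(match["offset"]) if match["offset"] else None
    return BaselineCriterion(match["kpi"], match["cond"], offset, match["action"])
```

For instance, parsing "hits<baseline-5%, stop as failed" yields the KPI "hits", the condition "<", an offset of -5.0, and the action "stop as failed".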

The following validations apply to the Condition and Offset:

- If the condition is > or >=, the offset, if defined, must have a "+" sign.

- If the condition is < or <=, the offset, if defined, must have a "-" sign.

- If the condition is = or ==, the offset must not be defined.

If validation fails or the syntax is incorrect, BlazeMeter does not recognize or enforce the pass/fail criterion.
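The Condition and Offset rules above can be expressed as a small check. This Python sketch is illustrative only: `is_valid_baseline_rule` is a hypothetical name, and treating the offset as optional for >, >=, <, and <= is an assumption based on the avg-rt>baseline example earlier in this section.

```python
import re
from typing import Optional

# Accepted offset shape in this sketch: a sign, a number, and a percent sign.
OFFSET_RE = re.compile(r"[+-]\d+(?:\.\d+)?%")


def is_valid_baseline_rule(condition: str, offset: Optional[str]) -> bool:
    """condition: ">", ">=", "<", "<=", "=" or "=="; offset: e.g. "+5%", "-5%", or None."""
    if condition in ("=", "=="):
        return not offset                  # equality must not have an offset
    if condition not in (">", ">=", "<", "<="):
        return False                       # unknown operator
    if not offset:
        return True                        # assumption: the offset is optional
    if not OFFSET_RE.fullmatch(offset):
        return False                       # malformed offset
    expected_sign = "+" if condition in (">", ">=") else "-"
    return offset[0] == expected_sign
```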

Stop tests when running from Taurus

You can configure tests to stop from Taurus YAML scripts.

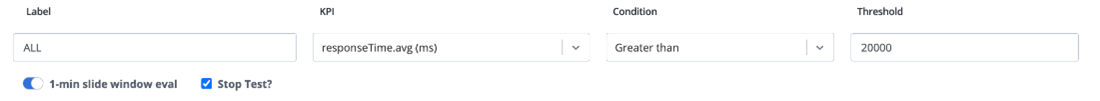

In a Taurus YAML script, you can configure a test to stop when it reaches a threshold by defining the timeframe value and the stop action. In BlazeMeter, the timeframe is converted to the 1-min slide window eval option, and stop is converted to the "Stop Test?" option.

In the pass/fail module of the YAML script, set the timeframe to "for 1m" ("for 60s" is also valid), and define the test to stop with one of the following actions: "stop as fail", "stop as failed", or "stop".

The following are examples of pass/fail criteria configured to stop a test:

```
avg-rt>20s for 1m, stop as fail
avg-rt>20s for 60s, stop as failed
avg-rt>20s for 1m, stop
```

For all the above examples, the failure criteria in BlazeMeter have both the 1-min slide window eval and Stop Test? options selected.

To let the test continue even after it reaches the threshold, use continue instead of stop. For example:

```
avg-rt>20s for 1m, continue
```

- Timeframes other than one minute (60 seconds) are not supported by BlazeMeter. In this case, the pass/fail module is converted to a failure criterion without the 1-min slide window eval and Stop Test? options.

- The test result (pass, failed) cannot be configured through Taurus. In BlazeMeter, the test result is calculated from the failure criteria: if at least one threshold is reached, the test result is failed. If you use Taurus syntax to set the test result to pass (for example, avg-rt>20s for 1m, stop as pass), it is ignored.
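The conversion rules in this section can be summarized as a Python sketch. It is not BlazeMeter's converter: `translate_stop_options` and `BlazeMeterOptions` are hypothetical names, the sketch only models the actions listed above (stop, stop as fail, stop as failed, continue), and it assumes that Stop Test? stays off when no action is given.

```python
import re
from typing import NamedTuple


class BlazeMeterOptions(NamedTuple):
    slide_window_eval: bool   # "1-min slide window eval"
    stop_test: bool           # "Stop Test?"


STOP_ACTIONS = {"stop", "stop as fail", "stop as failed"}
CONTINUE_ACTIONS = {"continue"}
ONE_MINUTE = {"1m", "60s"}


def translate_stop_options(criterion: str) -> BlazeMeterOptions:
    """Map a Taurus criterion such as "avg-rt>20s for 1m, stop" to BlazeMeter options."""
    body, _, action = criterion.partition(",")
    action = action.strip()
    if action and action not in STOP_ACTIONS | CONTINUE_ACTIONS:
        # e.g. "stop as pass": BlazeMeter ignores the result part; not modeled here.
        raise ValueError(f"action not modeled in this sketch: {action!r}")
    match = re.search(r"\bfor\s+(\S+)\s*$", body)
    if match is None or match.group(1) not in ONE_MINUTE:
        # No timeframe, or a timeframe other than one minute: neither option is set.
        return BlazeMeterOptions(slide_window_eval=False, stop_test=False)
    return BlazeMeterOptions(slide_window_eval=True, stop_test=action in STOP_ACTIONS)
```

With this model, all three stop examples above map to both options selected, "avg-rt>20s for 1m, continue" maps to the slide window option only, and a 30-second timeframe maps to neither.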

Baseline-based Taurus failure criteria in BlazeMeter UI

Like other Taurus pass/fail criteria, baseline-based criteria in YAML are translated to BlazeMeter failure criteria and appear in the test configuration UI.

To avoid issues, consider using lower percentile values, such as P99 or P95, in your test configurations. We recommend reviewing your test scripts to ensure compatibility with BlazeMeter's supported percentile range.

Each translated criterion shows the following values:

- KPI: the Key Performance Indicator defined in the pass/fail criterion.

- Condition: the comparison operator in the pass/fail criterion, translated to the corresponding phrase. For example, ">=" is translated to "Greater than or equal to".

- Threshold: the value calculated from the baseline and the offset. For example, if the average response time in the baseline is 100 ms and the criterion has a "+5%" offset, the threshold is baseline+5%, which is 105 ms.

- Use from baseline?: true

- % offset: the percentage defined in the pass/fail criterion, without the +/- sign. The sign determines whether the offset percentage is added to or subtracted from the baseline when the threshold is calculated.
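The following Python sketch shows how these fields could be derived from a baseline-based criterion, using the 100 ms and +5% example above. The `to_ui_fields` and `UiCriterion` names are hypothetical. Only the ">=" phrase is confirmed by this page; the other phrases are assumed from the Condition drop-down, and "Less than or equal to" in particular is an assumption.

```python
from typing import NamedTuple, Optional

# ">=" -> "Greater than or equal to" is documented; the other phrases are assumptions.
CONDITION_PHRASES = {
    ">": "Greater than",
    ">=": "Greater than or equal to",
    "<": "Less than",
    "<=": "Less than or equal to",
    "=": "Equal to",
    "==": "Equal to",
}


class UiCriterion(NamedTuple):
    kpi: str
    condition: str
    threshold: float
    use_from_baseline: bool
    offset_pct: float        # shown without its +/- sign


def to_ui_fields(kpi: str, condition: str, baseline_value: float,
                 signed_offset_pct: Optional[float] = None) -> UiCriterion:
    offset = signed_offset_pct or 0.0
    # The sign decides whether the offset is added to or subtracted from the baseline.
    threshold = baseline_value + baseline_value * offset / 100
    return UiCriterion(kpi, CONDITION_PHRASES[condition], threshold, True, abs(offset))
```

For example, `to_ui_fields("avg-rt", ">", 100.0, 5.0)` produces a threshold of 105.0 with a % offset of 5.0, and a "hits<baseline-5%" criterion with a baseline of 200 hits produces a threshold of 190.0.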
