New Relic insights into API Monitoring

New Relic is a real-time analytics platform. By connecting New Relic with BlazeMeter API Monitoring, you can collect metrics from your API tests and transform them into actionable insights about your applications in New Relic.

Prerequisites

- You have a New Relic account.

- You have set up an API Monitoring test in BlazeMeter whose results you want to send to New Relic.

For more information, see the New Relic documentation.

Setup

You need to get two values from New Relic to integrate it with BlazeMeter: an Account ID and a License Key.

Get New Relic Account ID and License Key

Follow these steps:

- Log in to your New Relic account.

- Open the API Keys page in your New Relic account.

- Copy your Account ID and the License Key for use in the next steps.

Activate New Relic for BlazeMeter API Monitoring

Follow these steps:

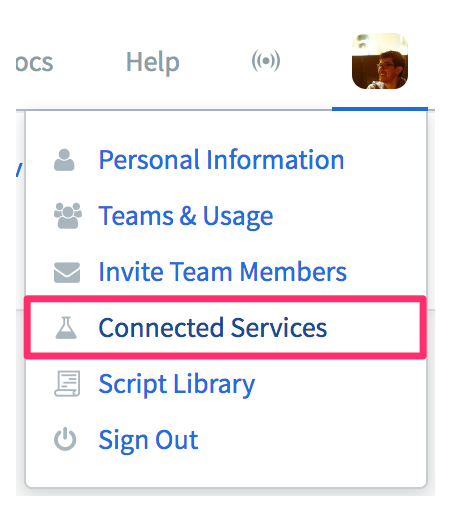

- In your BlazeMeter API Monitoring account, click your profile on the top-right, and select Connected Services.

-

Find the New Relic Insights logo and click Connect New Relic Insights.

-

Select your data region.

-

Enter your New Relic Account ID that you copied previously.

-

As Insert Key, enter your New Relic License Key that you copied previously.

-

Click Connect Account.

-

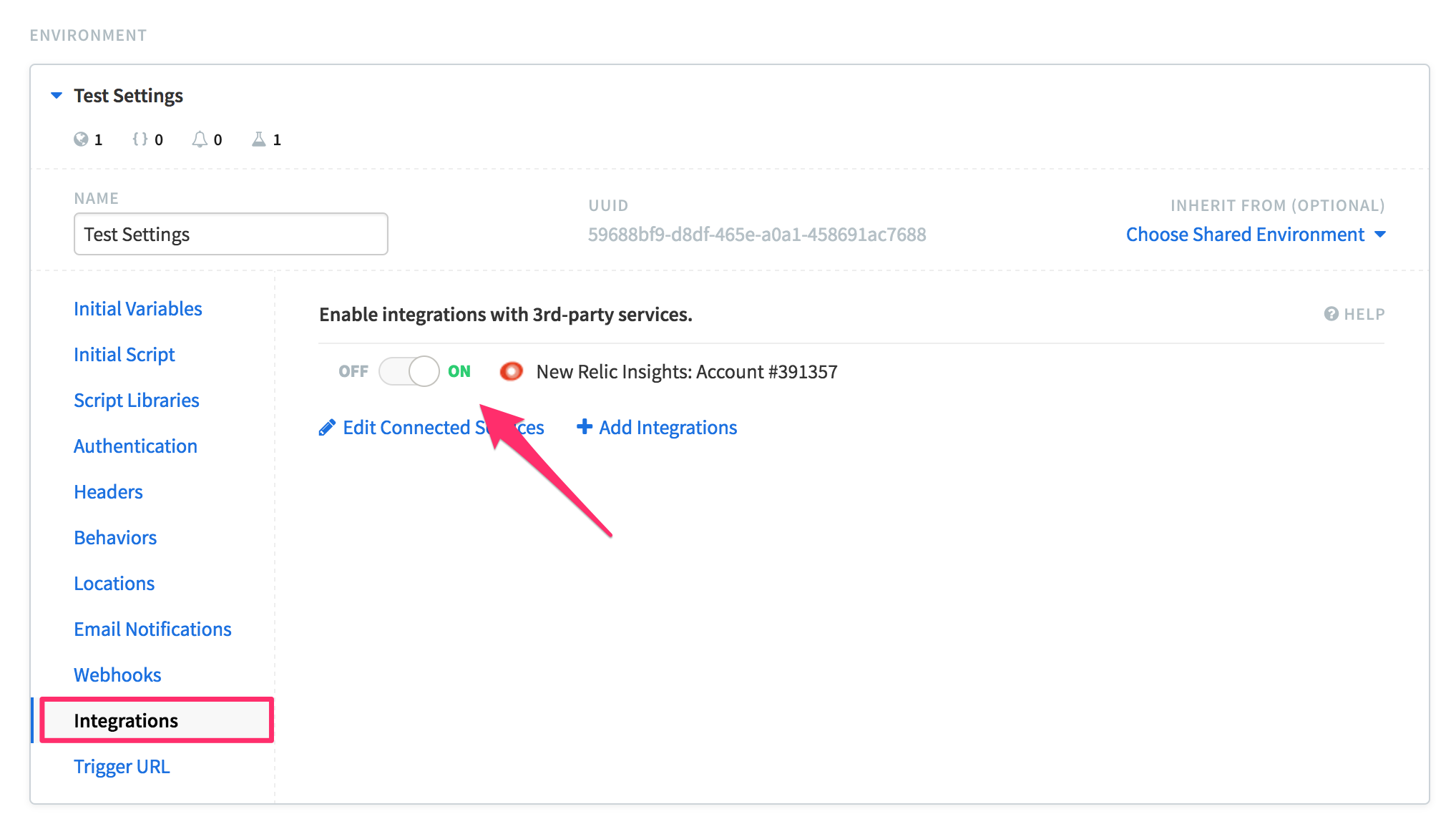

Open the API test and click Editor.

-

Expand Test Settings, click the Integrations tab in the left-hand menu, and toggle the New Relic Insights integration on.

Run NRQL queries in New Relic

To try these sample NRQL queries, open the Query Your Data screen in New Relic. To narrow any query to a single bucket or test, add a WHERE clause such as WHERE bucket_name = 'My Bucket' or WHERE test_name = 'My API Tests'.

Test Result Counts for Past Week

SELECT count(*) FROM RunscopeRadarTestRun FACET result TIMESERIES SINCE 1 week ago

Assertion Pass/Fail Counts by Day for Past Week

SELECT sum(assertions_passed), sum(assertions_failed) FROM RunscopeRadarTestRun SINCE 1 week ago TIMESERIES 1 day
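The two daily sums can be combined into an assertion pass rate. A minimal Python sketch, assuming you have copied the daily sum(assertions_passed) and sum(assertions_failed) values out of the query results; the function name pass_rate and the sample numbers are hypothetical:

```python
from typing import Optional


def pass_rate(passed: int, failed: int) -> Optional[float]:
    """Return the percentage of evaluated assertions that passed.

    Returns None when no assertions were evaluated, so an idle day
    is not reported as a 0% pass rate.
    """
    evaluated = passed + failed
    if evaluated == 0:
        return None
    return 100.0 * passed / evaluated


# Hypothetical daily sums copied from the query results.
print(pass_rate(480, 20))  # 96.0
print(pass_rate(0, 0))  # None
```

Returning None rather than 0 keeps days with no test runs from dragging down an average pass rate.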

Response Time Percentiles Over Past Day

SELECT percentile(response_time_ms, 5, 50, 95) FROM RunscopeRadarTestRequest TIMESERIES SINCE 1 day ago

Response Time Histogram Over Past Week

SELECT histogram(response_time_ms, 1000) FROM RunscopeRadarTestRequest SINCE 1 week ago

Response Status Codes for Past Day

SELECT count(*) FROM RunscopeRadarTestRequest SINCE 1 day ago FACET response_status_code TIMESERIES
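If you generate these queries from a script, the bucket and test filters can be added programmatically. A minimal Python sketch that inserts a WHERE clause directly after the FROM event type; the helper names add_filters and nrql_string are hypothetical, and the backslash escaping of single quotes is an assumption to verify against the NRQL syntax reference:

```python
import re


def nrql_string(value: str) -> str:
    """Quote a value as an NRQL string literal.

    Assumes NRQL accepts a backslash before a single quote inside a
    single-quoted string; verify against the NRQL syntax reference.
    """
    return "'" + value.replace("'", "\\'") + "'"


def add_filters(query: str, **filters: str) -> str:
    """Insert a WHERE clause right after the FROM <EventType> part of an NRQL query.

    Each keyword argument becomes an equality test on that attribute;
    multiple tests are joined with AND.
    """
    if not filters:
        return query
    if re.search(r"\bWHERE\b", query, re.IGNORECASE):
        raise ValueError("query already contains a WHERE clause")
    source = re.search(r"\bFROM\s+\w+", query, re.IGNORECASE)
    if source is None:
        raise ValueError("query has no FROM clause")
    conditions = " AND ".join(
        f"{attribute} = {nrql_string(value)}" for attribute, value in filters.items()
    )
    end = source.end()
    return f"{query[:end]} WHERE {conditions}{query[end:]}"


base = "SELECT count(*) FROM RunscopeRadarTestRun FACET result TIMESERIES SINCE 1 week ago"
print(add_filters(base, bucket_name="My Bucket", test_name="My API Tests"))
# SELECT count(*) FROM RunscopeRadarTestRun WHERE bucket_name = 'My Bucket' AND test_name = 'My API Tests' FACET result TIMESERIES SINCE 1 week ago
```

The sketch refuses queries that already contain WHERE rather than trying to merge conditions, which keeps the string handling simple.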

Event reference

Every completed test run generates two types of events: one RunscopeRadarTestRun event, which summarizes the entire run, and one RunscopeRadarTestRequest event for each individual request. The tables below describe each field. The Identifier column gives the attribute name to use in NRQL queries.

RunscopeRadarTestRun

| Friendly Name | Identifier | Description |
|---|---|---|
| Test Name | test_name | The name of the test. |
| Test ID | test_id | The unique ID for the test. |
| Test Run ID | test_run_id | The unique ID for the individual test run instance. |
| Result | result | The outcome of the test run, either 'passed' or 'failed'. |
| Started At | started_at | The timestamp when the test run started. |
| Finished At | finished_at | The timestamp when the test run completed. |
| Assertions Defined | assertions_defined | The number of assertions defined for all requests in the test. |
| Assertions Passed | assertions_passed | The number of assertions that passed in this test run. |
| Assertions Failed | assertions_failed | The number of assertions that failed in this test run. |
| Variables Defined | variables_defined | The number of variables defined in this test run. |
| Variables Passed | variables_passed | The number of variables that passed in this test run. |
| Variables Failed | variables_failed | The number of variables that failed in this test run. |
| Scripts Defined | scripts_defined | The number of scripts defined in this test run. |
| Scripts Passed | scripts_passed | The number of scripts that passed in this test run. |
| Scripts Failed | scripts_failed | The number of scripts that failed in this test run. |
| Requests Executed | requests_executed | The number of requests executed in this test run. |
| Environment Name | environment_name | The name of the environment used in this test run. |
| Environment ID | environment_id | The ID of the environment used in this test run. |
| Bucket Name | bucket_name | The name of the API Monitoring bucket the test belongs to. |
| Bucket Key | bucket_key | The unique key for the API Monitoring bucket the test belongs to. |
| Team Name | team_name | The name of the API Monitoring team the test belongs to. |
| Team ID | team_id | The unique ID for the API Monitoring team the test belongs to. |
| Agent | agent | The agent used to execute this test run, or null if a default API Monitoring location was used. |
| Region | region | The region code of the location the test ran from, or null if an agent was used. |
| Region Name | region_name | The full region name and location the test ran from, or null if an agent was used. |
| Initial Variables | (name of each variable) | Each initial variable is added as its own attribute, using the variable name as the key and the variable's value as the value. Initial variables are captured after the initial scripts and variables have been processed, but before the first request executes. |
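Because initial variables become ordinary top-level attributes, they can be filtered or faceted on like any built-in identifier. A hypothetical Python sketch of the resulting event shape; the helper build_test_run_event, the variable names, and the values are made up for illustration, and how BlazeMeter resolves a variable whose name matches a built-in attribute is not described above, so the precedence chosen here is an assumption:

```python
from typing import Any, Dict


def build_test_run_event(
    summary: Dict[str, Any], initial_variables: Dict[str, Any]
) -> Dict[str, Any]:
    """Show how initial variables appear as top-level attributes on the event.

    Hypothetical illustration only: BlazeMeter builds the real event. On a
    name clash this sketch keeps the built-in attribute, which is an assumption.
    """
    event = dict(initial_variables)
    event.update(summary)  # built-in attributes win over same-named variables
    return event


event = build_test_run_event(
    {"test_name": "My API Tests", "result": "passed"},
    {"base_url": "https://api.example.com", "api_version": "v2"},
)
print(event["base_url"])  # https://api.example.com
```

With an event shaped like this, a query such as SELECT count(*) FROM RunscopeRadarTestRun FACET api_version would group runs by that variable's value.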

RunscopeRadarTestRequest

| Friendly Name | Identifier | Description |
|---|---|---|
| Test Name | test_name | The name of the test. |
| Test ID | test_id | The unique ID for the test. |
| Test Run ID | test_run_id | The unique ID for the individual test run instance. |
| Request URL | request_url | The URL requested in this test step. |
| Request Method | request_method | The HTTP method used for this request: one of GET, POST, PUT, PATCH, or DELETE. |
| Response Status Code | response_status_code | The HTTP status code of the response, if the request completed. |
| Response Size Bytes | response_size_bytes | The size of the HTTP response body, in bytes. |
| Response Time | response_time_ms | The time the server took to respond, in milliseconds. |
| Started At | started_at | The timestamp when the test run started. |
| Finished At | finished_at | The timestamp when the test run completed. |
| Assertions Defined | assertions_defined | The number of assertions defined for this request. |
| Assertions Passed | assertions_passed | The number of assertions that passed for this request in this test run. |
| Assertions Failed | assertions_failed | The number of assertions that failed for this request in this test run. |
| Variables Defined | variables_defined | The number of variables defined for this request. |
| Variables Passed | variables_passed | The number of variables that passed for this request in this test run. |
| Variables Failed | variables_failed | The number of variables that failed for this request in this test run. |
| Scripts Defined | scripts_defined | The number of scripts defined for this request. |
| Scripts Passed | scripts_passed | The number of scripts that passed for this request in this test run. |
| Scripts Failed | scripts_failed | The number of scripts that failed for this request in this test run. |
| Environment Name | environment_name | The name of the environment used for this request in this test run. |
| Environment ID | environment_id | The ID of the environment used for this request in this test run. |
| Bucket Name | bucket_name | The name of the API Monitoring bucket the test belongs to. |
| Bucket Key | bucket_key | The unique key for the API Monitoring bucket the test belongs to. |
| Team Name | team_name | The name of the API Monitoring team the test belongs to. |
| Team ID | team_id | The unique ID for the API Monitoring team the test belongs to. |
| Agent | agent | The agent used to execute this test run, or null if a default API Monitoring location was used. |
| Region | region | The region code of the location the test ran from, or null if an agent was used. |
| Region Name | region_name | The full region name and location the test ran from, or null if an agent was used. |
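Both event types carry test_run_id, so request-level events can be rolled up under the run that produced them. Inside New Relic, a query such as SELECT count(*), sum(response_time_ms), sum(assertions_failed) FROM RunscopeRadarTestRequest FACET test_run_id does this grouping. For events exported elsewhere, a minimal Python sketch, assuming each event is a dictionary keyed by the identifiers above; the function name summarize_runs and the sample values are hypothetical:

```python
from collections import defaultdict
from typing import Any, Dict, List


def summarize_runs(requests: List[Dict[str, Any]]) -> Dict[str, Dict[str, int]]:
    """Group RunscopeRadarTestRequest events by test_run_id and total them per run."""
    runs: Dict[str, Dict[str, int]] = defaultdict(
        lambda: {"requests": 0, "total_response_time_ms": 0, "assertions_failed": 0}
    )
    for request in requests:
        run = runs[request["test_run_id"]]
        run["requests"] += 1
        # Treat a missing or null value as 0 so incomplete events do not raise.
        run["total_response_time_ms"] += request.get("response_time_ms") or 0
        run["assertions_failed"] += request.get("assertions_failed") or 0
    return dict(runs)


sample = [
    {"test_run_id": "run-1", "response_time_ms": 120, "assertions_failed": 0},
    {"test_run_id": "run-1", "response_time_ms": 340, "assertions_failed": 1},
    {"test_run_id": "run-2", "response_time_ms": 95, "assertions_failed": 0},
]
print(summarize_runs(sample)["run-1"])
# {'requests': 2, 'total_response_time_ms': 460, 'assertions_failed': 1}
```

Since one RunscopeRadarTestRequest event is generated per request, the per-run request count can be cross-checked against requests_executed on the matching RunscopeRadarTestRun event.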