Creating an API Functional Test

We are building something new

Starting February 2022, the API Functional testing feature has been deprecated. Depending on your subscription plan, you may still be able to run existing tests but can no longer create new ones. Please use BlazeMeter API Monitoring to create and run your API Functional Tests going forward.

BlazeMeter's API Functional Test allows you to test whether your APIs work as expected.

- Creating the Test

- Adding Requests

- Modifying Requests

- Adding Query Parameters

- Adding Headers

- Adding a Body

- Adding Assertions

- Extracting From Responses

- Adding Scenarios

- Scenario Options

- Common Functions

- Negative Testing

Creating the Test

- Click the "Functional" tab in the navigation bar in order to view functional tests and access functional test features. For more information on Functional and Performance tests and how these tabs filter tests, please review the Getting Started guide.

- Click the "Create Test" button.

- Click the "API Functional Test" button.

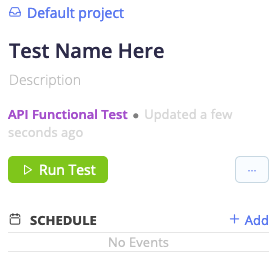

- On the left-hand side, provide a name for your test and, optionally, a description and schedule.

You now have four options for building your new API Functional Test:

- This guide walks you through how to create the test purely via the UI and without scripting anything.

- If you would prefer to write your own new script from within the UI, refer to our guide on Scripting an API Functional Test in the UI.

- If you already have your own script and prefer to use it, refer to our guide on Creating an API Functional Test Using an Existing Script.

- If you would like to record a script to use for API testing, consider using either BlazeMeter's Chrome Extension or Proxy Recorder. Once you've recorded your script, you can use it by following the above-referenced guide on creating a test using an existing script.

You may either create the test in the UI or upload an existing script, but you cannot do both simultaneously.

If you're sticking with the first option, then read on!

Adding Requests

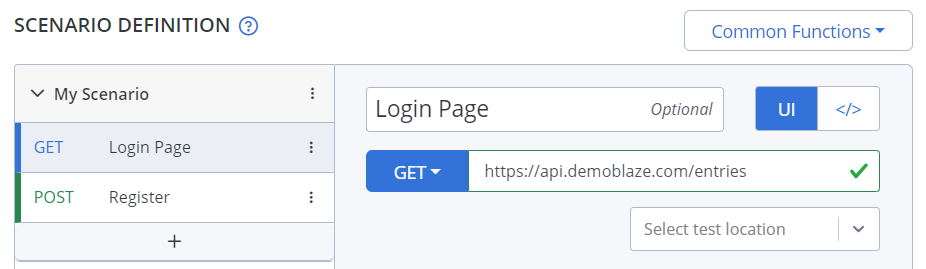

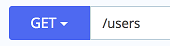

On the right-hand side, you'll find the Scenario Definition. Provide test details here, such as the URL to test, the URL label, and the request method (GET, POST, PUT, and so on).

You can create a sequential chain of requests in the left-hand column, which is useful when a test needs to run through several API calls in order. To add a request, click the plus ("+") button below your current request.

Alternatively, you can click the vertical column of three dots to the right of an existing request to open a drop-down menu, which will allow you to either duplicate or delete that request.

Modifying Requests

Once you have created a new request in the left-hand column, you can access a wealth of options for modifying the request in the right-hand column. Here, you can change the name, type of request, and various other options provided by additional tabs.

For example, when a new request is created, it defaults to a "GET" request. But this can be changed to a different type of request (such as "POST", "PATCH", etc.) by clicking the blue "GET" button to open a drop-down menu.

Additional options for the request can be accessed via the options tabs underneath the URL field.

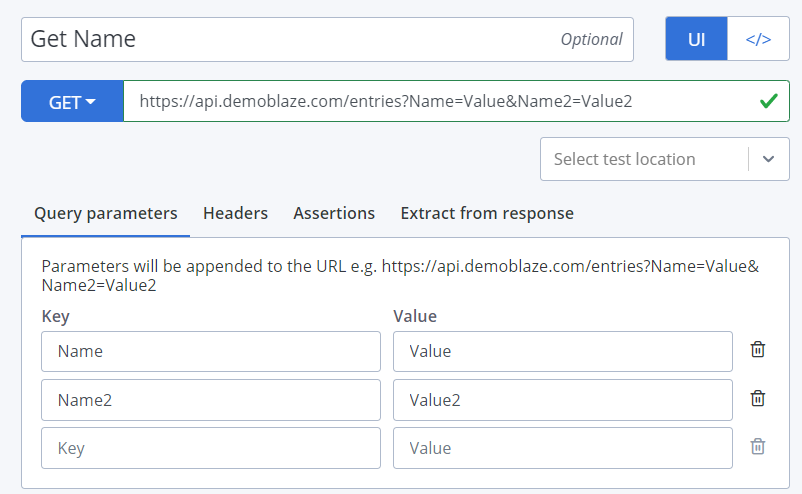

Adding Query Parameters

The Query Parameters tab provides fields for adding parameters to an API call in order to query specific data. This tab is selected by default, and it must be selected in order to add or edit parameters.

Consider the following example: The API endpoint "https://api.demoblaze.com/entries" allows the use of the "Name" and "Name2" keys to query name values. If you were to pass this query to the endpoint with cURL, the URL would look like "https://api.demoblaze.com/entries?Name=Value&Name2=Value2".

Instead, you can save time and effort by simply entering the key and value into their respective fields under the Query Parameters tab. BlazeMeter will create the API call for you, automatically appending the parameters to the end of the URL.

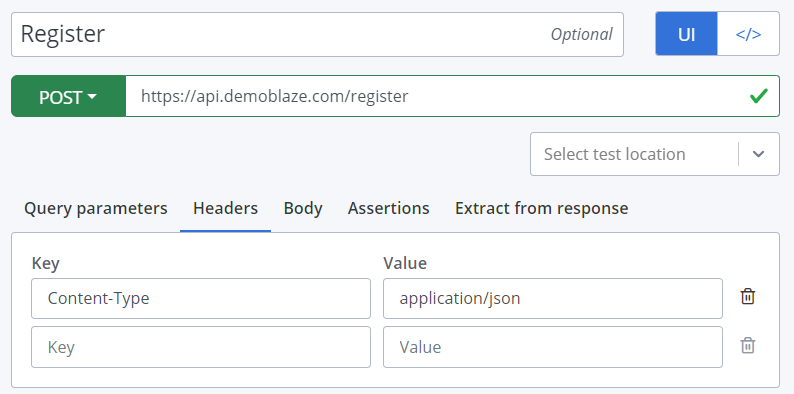
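For comparison, the same URL can be assembled in a few lines of Python. This is only a sketch of the general technique of appending query parameters, not BlazeMeter's own implementation; the `build_url` helper is a hypothetical name introduced for illustration.

```python
from urllib.parse import urlencode


def build_url(endpoint, params):
    """Append key/value pairs to an endpoint as a URL query string.

    Hypothetical helper for illustration; not part of BlazeMeter.
    """
    if not params:
        return endpoint
    # urlencode percent-encodes keys and values and joins them with "&".
    return f"{endpoint}?{urlencode(params)}"


# Endpoint and keys are taken from the example above.
url = build_url(
    "https://api.demoblaze.com/entries",
    {"Name": "Value", "Name2": "Value2"},
)
print(url)
```

Running the sketch prints the same URL as in the cURL example, which is what the Query Parameters tab builds for you from the key and value fields.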

Adding Headers

Under the Headers tab, you will find "Key" and "Value" fields for adding HTTP headers to your API call. This may be required by your application server.

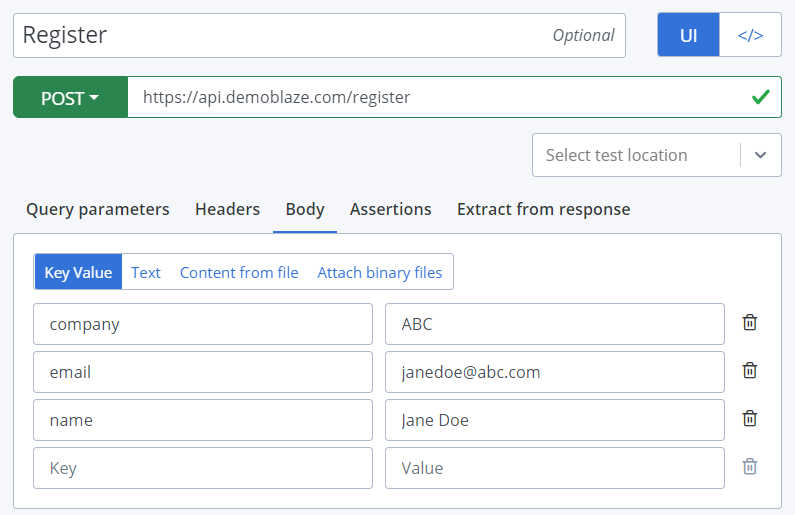

Adding a Body

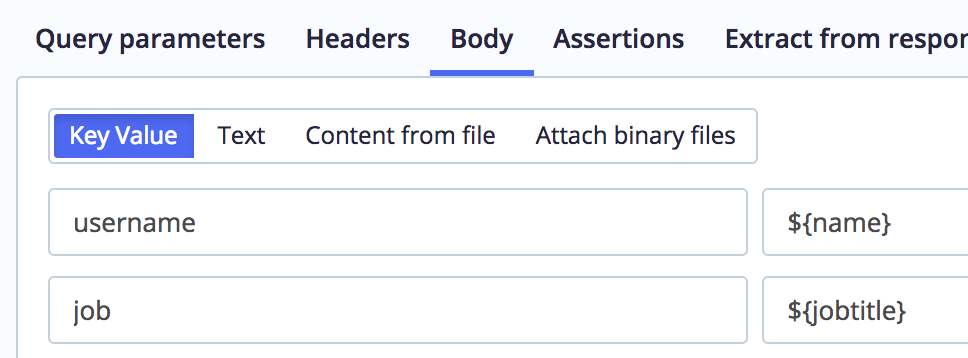

The Body tab will only appear if the request type is one in which body data may be sent. For example, for a "GET" request, there will be no Body tab available, but for a "POST" request, it will appear as an option.

Body data can be entered in one of the following formats:

- Key Value - If the application server requires body data to be sent via specific keys.

- Text - For entering raw text, such as JSON content.

- Content from file - If you already have a file containing the required body data, it may be uploaded here.

- Attach binary files - This option consists of three fields: a parameter name field, an option to upload a file for the parameter, and a field for providing the MIME type (if left blank, it will be determined automatically).

Adding Assertions

The Assertions tab can be used to add assertions to verify the existence of specific data in the response.

Follow these steps:

- Click the "Choose type" field. A drop-down menu appears, showing various options to choose from.

- Fill in the fields that appear for the type you chose.

- Click the "Add" button to create the assertion. You can add multiple assertions of the same or different types.

If an assertion fails, it will appear under the "Failed" tab of the test report. In the report, click the assertion to expand specific failures.

Extracting From Responses

The Extract from response tab provides the option to extract data from the response, and then store it in a variable for future use.

Follow these steps:

- Click the "Choose type" field to access a drop-down menu, select the preferred extraction type, and click the "Add" button.

- Provide a name for the variable to be created.

- Enter data to extract into the "Value" field, depending on the type you selected.

- Click the "Copy" button on the right side of the "Variable name" field to copy the variable to the clipboard.

- In a later request, you can paste the variable into a URL or other request field. The variable appears in the format "${variable}".
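Conceptually, extracting a value and reusing it in a later request behaves like the Python sketch below. It assumes a JSON response and uses the standard library's `string.Template`, which happens to share the `${variable}` placeholder syntax. The response body, the `entry_id` variable name, the `extract_json_field` helper, and the follow-up URL path are all hypothetical, and BlazeMeter's actual extraction types may work differently.

```python
import json
from string import Template


def extract_json_field(response_body, key):
    """Return one top-level field from a JSON response body.

    Hypothetical helper standing in for an extraction step.
    """
    return json.loads(response_body)[key]


# Hypothetical response from a first request.
response_body = '{"id": 42, "name": "demo"}'

# Store the extracted value under a variable name, as the UI would.
variables = {"entry_id": extract_json_field(response_body, "id")}

# A later request references the variable with the ${variable} syntax.
# The "/entries/<id>" path is an assumption made for this example.
next_url = Template("https://api.demoblaze.com/entries/${entry_id}").substitute(variables)
print(next_url)
```

The second request therefore targets the entry whose identifier was returned by the first one, without hard-coding that identifier in the test.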

The advanced options available through the Scenario Definition provide a simple, time-saving method for quickly creating and automating more complex URL/API tests.

Adding Scenarios

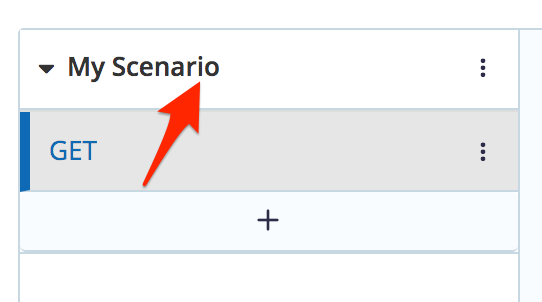

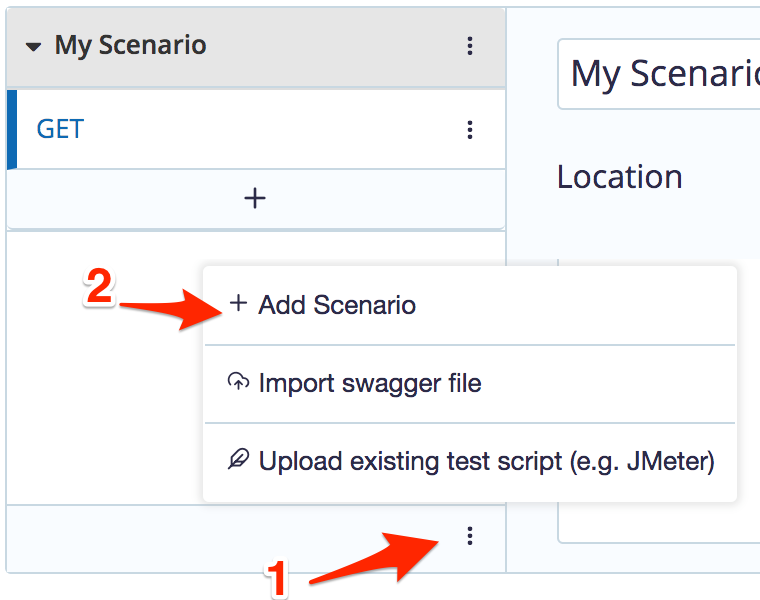

An API Functional Test can contain multiple scenarios. To create an additional scenario, open the menu at the bottom of the left-hand side.

Only a single scenario will be expanded at a time. To expand another scenario, simply click on the scenario name on the left side.

Scenario settings screen

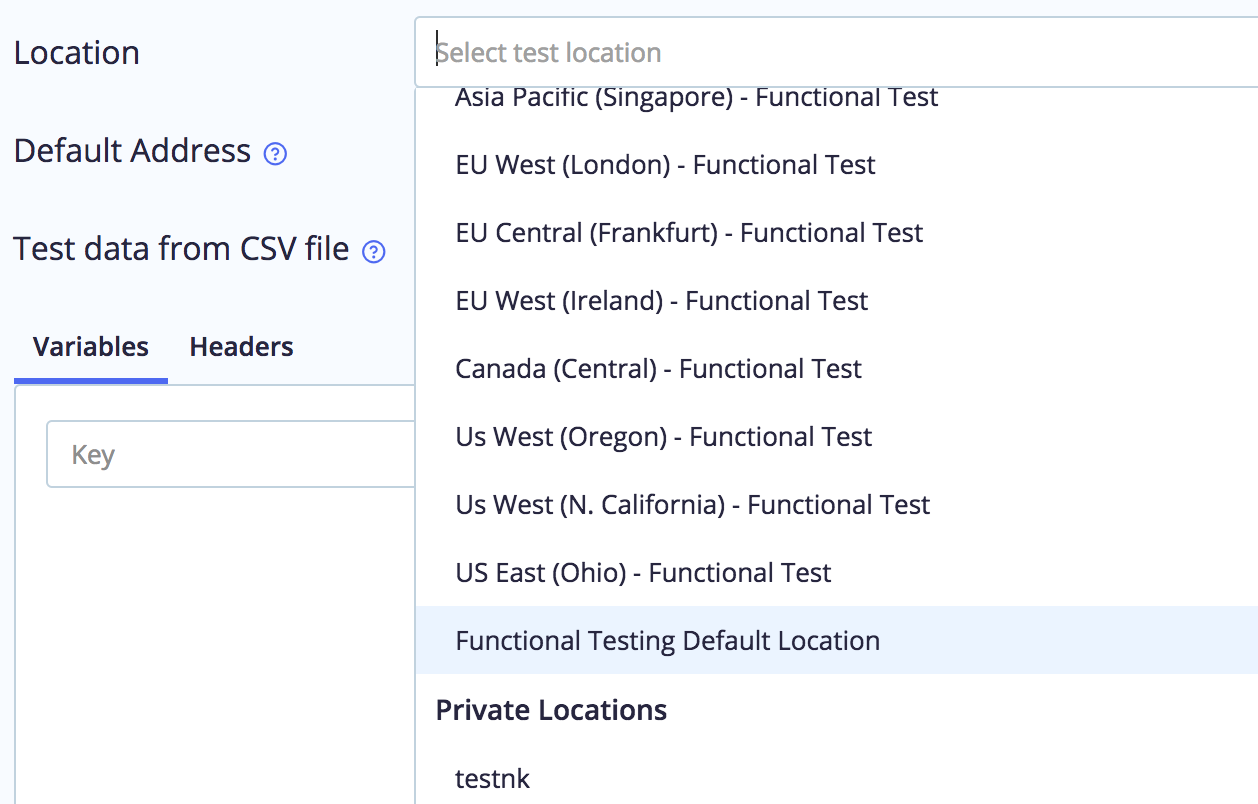

The scenario settings screen allows you to set configurations that are applied to all requests within the scenario, such as "Default Address", "Variables", and "Headers". Furthermore, you can select the location from which the scenario will be executed. Simply click on the scenario name ("My Scenario" in this example screenshot) to display the scenario settings screen.

Scenario Options

Click the scenario name ("My Scenario" by default) to configure a number of optional settings in the scenario settings screen:

- Scenario name: The default name is "My Scenario", which you can change here.

- Templates: Templates are a great way to make creating tests faster and easier, including templates for different types of authentication.

- UI / </> Toggle: When "UI" is selected, you build the test in the web interface. When "</>" is selected, you instead script the test by writing a YAML script. Selecting this scripting option removes all other UI-related options.

- Location: Select a location to run the test from.

For more information about how to allow IPs for the locations available, see Allowlisting BlazeMeter Engines.

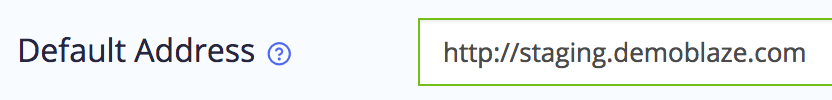

- Default Address: Here you can enter a default address that will be used for all requests in the scenario. This allows you, for example, to easily switch from a QA to a staging environment by changing a single URL (e.g. from http://qa.demoblaze.com to http://staging.demoblaze.com).

Once you've entered a default address, you only need to enter the endpoint in your requests, instead of the entire URL.

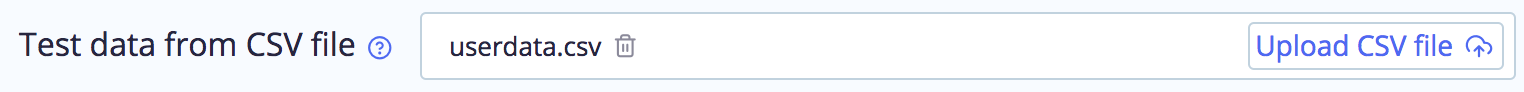

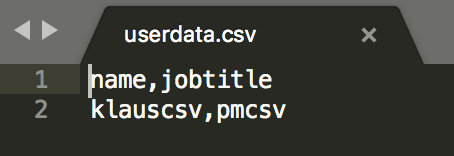

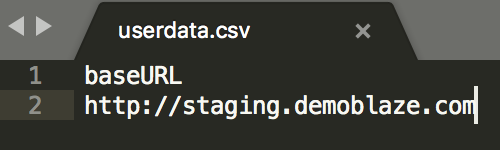

- Test data from CSV file: Here you upload a CSV file. The file must have the .csv suffix and contain the comma-separated data that you want to use in your test. Currently, only a single file and a single row of data are supported.

The first row of your CSV file will be used as variable names; in this example, "name" and "jobtitle".

You can then reference the values of the second row anywhere in your test with variable syntax such as ${name} and ${jobtitle}.

Tip: You can set the base URL dynamically using variable data from a CSV file. For example, you can define a base URL in your CSV file, then use it as the default address.

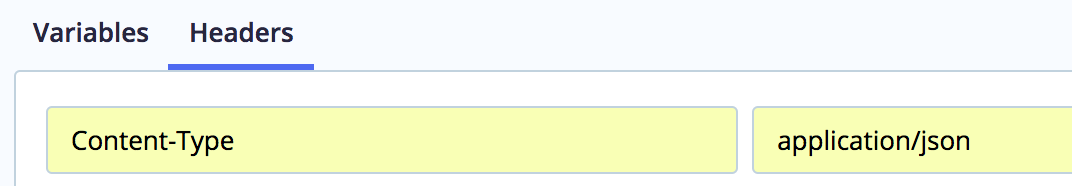

- Variables / Headers: You can define variables and headers that will be applied to all requests in the scenario. Example usages include specifying a username that you want to use for multiple requests or a header that applies to all requests.

- Virtual Services Configuration: For more information about how to configure your system under test to call virtual services instead of real services, see Adding a virtual service to a test.

- DNS Override: For more information about how to point your test at an alternate server without editing the script, see DNS Override.
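To see how the Default Address and CSV test data options described above fit together, consider the following Python sketch. It is an illustration under stated assumptions rather than a description of BlazeMeter's internals: the `load_first_row` and `resolve` helpers, the sample CSV values, and the `/users/` endpoint are hypothetical. Only the "name" and "jobtitle" column names and the QA and staging addresses come from the text above.

```python
import csv
import io
from string import Template


def load_first_row(csv_text):
    """Map the header row (variable names) to the first data row (values)."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return next(reader)


def resolve(default_address, endpoint, variables):
    """Join the default address with an endpoint, then fill in ${variables}."""
    url = default_address.rstrip("/") + "/" + endpoint.lstrip("/")
    return Template(url).substitute(variables)


# Hypothetical CSV content: a header row followed by a single data row.
csv_text = "name,jobtitle\nAda,Engineer\n"
variables = load_first_row(csv_text)

# Switching environments only requires changing the default address.
for default_address in ("http://qa.demoblaze.com", "http://staging.demoblaze.com"):
    print(resolve(default_address, "/users/${name}", variables))
```

The request definition itself (the endpoint and its `${name}` placeholder) stays the same in both iterations; only the default address changes.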

Now you are ready to run the API functional test.

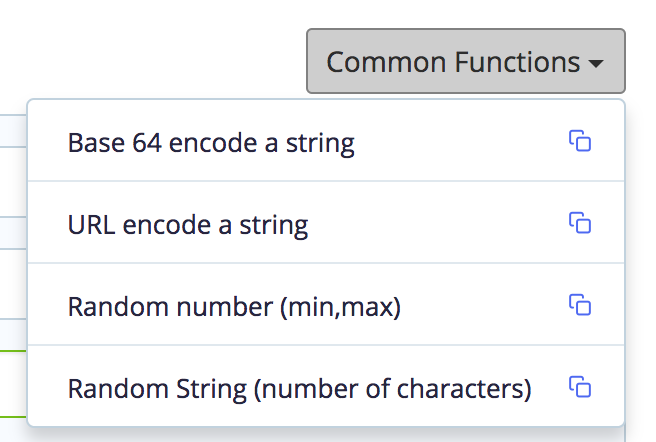

Common Functions

The Common Functions dropdown offers helper functions that make it easier to create certain requests. Click the Copy button to copy an example of a function that implements base64 or URL encoding, or that generates a random number or string. You can then paste the function into any field and define its arguments.

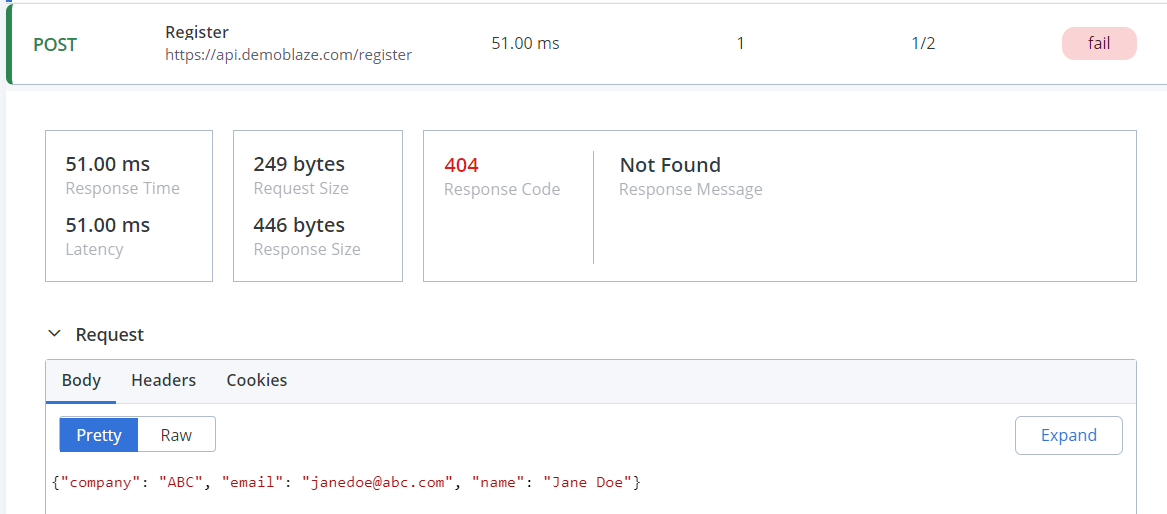
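If you are unsure what each kind of helper produces, the Python sketch below shows equivalent operations using only the standard library. The function names here are hypothetical and are not the names or syntax that BlazeMeter's Common Functions dropdown provides; the sketch is only meant to show the kind of value each helper yields.

```python
import base64
import random
import string
from urllib.parse import quote


def base64_encode(text):
    """Encode text as base64, as often needed for Basic authentication."""
    return base64.b64encode(text.encode("utf-8")).decode("ascii")


def url_encode(text):
    """Percent-encode every reserved character so the text is URL-safe."""
    return quote(text, safe="")


def random_number(low, high):
    """Return a random integer between low and high, inclusive."""
    return random.randint(low, high)


def random_string(length):
    """Return a random string of ASCII letters with the given length."""
    return "".join(random.choices(string.ascii_letters, k=length))


print(base64_encode("user:pass"))
print(url_encode("a b&c"))
print(random_number(1, 10))
print(random_string(8))
```

The first two calls are deterministic, while the last two produce a different value on each run, which is useful for generating unique test data.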

Negative Testing

By default, BlazeMeter marks a test as "failed" if the response code returned is anything other than a 2xx code (such as "200", meaning "OK"). This means that any other response code, such as a 4xx code (for example "400", meaning "Bad Request"), results in the test being marked as "failed".

BlazeMeter behaves this way by default because this is the most common (and simplest) use case for functional testing. You are not required to stick with this default setting, however.
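The default rule can be pictured as a simple status-code check. The Python sketch below is an illustration only, not BlazeMeter's implementation; the `passes` function and its `expected` parameter are hypothetical. It also covers the alternative case in which a test expects one specific code, which may be a non-2xx code.

```python
def passes(status_code, expected=None):
    """Decide whether a response counts as a pass.

    With no expected code, any 2xx response passes (the default rule).
    With an expected code, only that exact code passes.
    """
    if expected is None:
        return 200 <= status_code <= 299
    return status_code == expected


print(passes(200))                # default rule, 2xx response
print(passes(400))                # default rule, 4xx response
print(passes(400, expected=400))  # test that expects a "400" code
```

Under the default rule the "400" response fails, whereas a test that explicitly expects "400" treats the same response as a pass.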

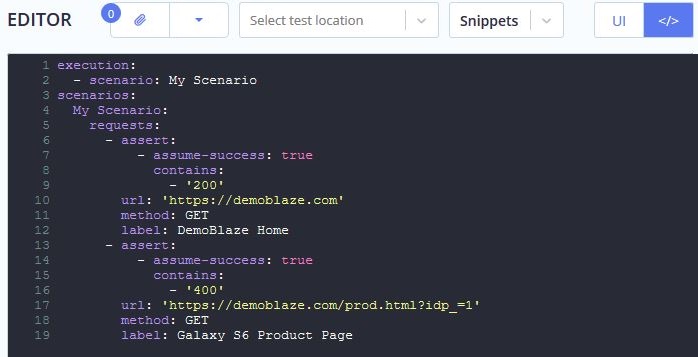

A negative test is a test in which the behavior is inverted. In BlazeMeter, this means you can use the "assume-success" option to change what response codes will result in a test being marked as "failed".

You must use the script editor in order to implement this option. For details on how to use BlazeMeter's script editor, please refer to our guide, Scripting an API Functional Test in the UI.

While editing the script, add an "assert" line that includes "assume-success: true", then set the "contains" argument to whatever response code you want to set for your assertion.

Consider the example script below, where the first request sets an assertion to a "200" code, followed by a second request which sets its assertion to a "400" code.